In the same way that happens for any IT system, the main security goals are:

Frameworks like OWASP SAMM (Software Assurance Maturity Models) show us a way to approach such an assessment challenge. Having cloud-specific metrics and the right incentives in the organization hierarchy is a must to successfully deploy and drive a security enhancement strategy.

We call "programme" that long term strategy and it encompass periodic assessment against the specific metrics to measure the progress and the effectiveness of the resources allocated to the programme.

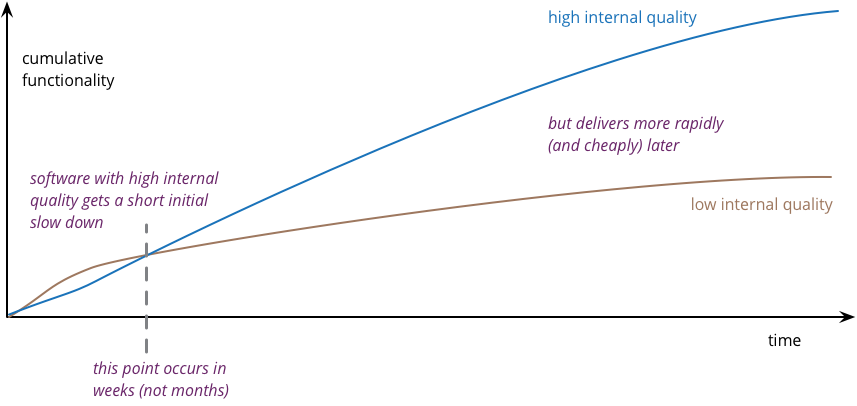

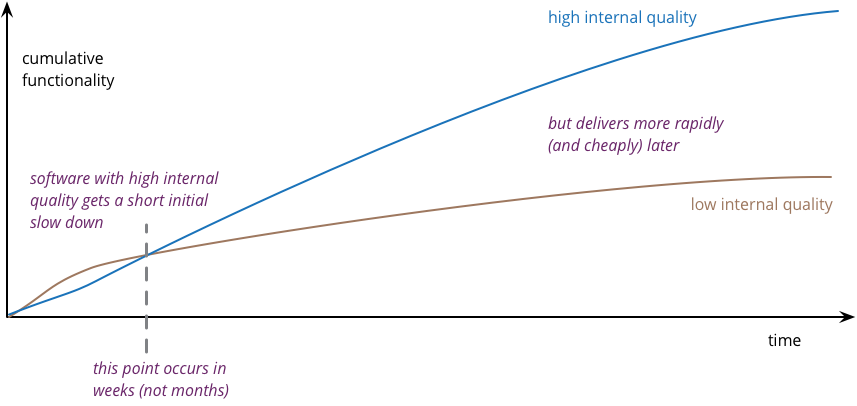

Having a single, central programme for maturity enhancement with clear goals will also boost technical resource reuse, experience sharing and wish to be more security-mature form the owners of the project itself. The extreme quality of the biggest cloud providers platforms and documentation falsify the dichotomy of security VS speed (cost) of development, at least in the longer term. Leveraging the vendor tools and best practices, but also applying security and quality principles to the software projects from the very beginning of the lifecycle will, always sooner, show the advantages in terms of ease of development of new features and operational costs of maintenance. Continuous delivery of new software features is key for most companies, and shortcuts in security and quality are traps for the business itself. This is clearly shown by Martin Fowler in the "Is High Quality Software Worth the Cost?" graph:

Personally, I like the approach to OWASP SAMM framework, but (rightly, being generic or "technology and process agnostic") it lacks practices definition for the specific architecture, in our case, cloud-based architecture. The "practices" term in the previous sentence can be specified in: entry-level, medium and state of the art/best practices. Those practices, when implemented in the project would be associated with level 1, 2 or 3 of the maturity assessment.

Well structured but generic practices, like those defined in SAMM framework, need to be identified in the context of the specific software infrastructure. It can be based on infrastructure as a service (IaaS), platform as a service (PaaS), serverless a.k.a function as a service (FaaS), hybrid on-premises and multi-cloud-vendor or combinations e of those. Classifying design security principles based practices, brainstorming, experience, documented best practices and threat modeling are among the ways to identify (and implement) specific security practices that can be associated with the technology-agnostic practices structured by the assessment framework. This way we are shifting from project "checklists" to a structured approach, needed for an effective long term security strategy.

Following is a list of principles. Nested cloud-specific annotations are helpful to accomplish the principle:

- Assess the risk level

- Protect assets reducing risk through mitigation strategy

...both goals in the most cost-efficient and business enabling way.

To assess a cloud-based system architecture security posture we need to identify and structure a well-defined set of dimensions (metrics) that can be measured against such cloud system. Having that risk assessment then will drive the development and risk governance strategy including refactoring efforts in a more efficient and coherent way. This is true whether we are considering a single system or, especially, a set of independent systems. This is key in larger, more structured organizations.Frameworks like OWASP SAMM (Software Assurance Maturity Models) show us a way to approach such an assessment challenge. Having cloud-specific metrics and the right incentives in the organization hierarchy is a must to successfully deploy and drive a security enhancement strategy.

We call "programme" that long term strategy and it encompass periodic assessment against the specific metrics to measure the progress and the effectiveness of the resources allocated to the programme.

Having a single, central programme for maturity enhancement with clear goals also boosts the reuse of technical resources, experience sharing, and the project owners' own wish to become more security-mature. The high quality of the biggest cloud providers' platforms and documentation disproves the supposed dichotomy of security vs. speed (cost) of development, at least in the longer term. Leveraging vendor tools and best practices, and applying security and quality principles to software projects from the very beginning of the lifecycle, will show its advantages sooner rather than later, both in the ease of developing new features and in lower maintenance costs. Continuous delivery of new software features is key for most companies, and shortcuts in security and quality are traps for the business itself. Martin Fowler shows this clearly with the graph in his article "Is High Quality Software Worth the Cost?".

Personally, I like the OWASP SAMM approach, but (rightly, being generic, or "technology and process agnostic") it lacks practice definitions for specific architectures, in our case cloud-based architectures. The practices mentioned in the previous sentence can be graded as entry-level, medium, and state-of-the-art (best practices). Once implemented in a project, those practices would be associated with level 1, 2 or 3 of the maturity assessment, respectively.
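The association between practice tiers and maturity levels can be sketched in a few lines of Python. The scoring rule (a level counts as reached only when every practice of that tier and of all lower tiers is implemented) and the practice names are assumptions for illustration, not something SAMM itself prescribes.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Tier of a cloud-specific practice; the value is the SAMM level it maps to."""
    ENTRY_LEVEL = 1
    MEDIUM = 2
    STATE_OF_THE_ART = 3


def maturity_level(catalogue: dict[str, Tier], implemented: set[str]) -> int:
    """Return the highest level L such that every practice of tier <= L is implemented."""
    level = 0
    for tier in sorted(Tier):
        required = {name for name, t in catalogue.items() if t == tier}
        if not required <= implemented:
            break  # a gap in this tier caps the level
        level = int(tier)
    return level


# Hypothetical catalogue of practices for one SAMM security practice.
catalogue = {
    "iam-no-wildcard-actions": Tier.ENTRY_LEVEL,
    "iac-for-all-resources": Tier.ENTRY_LEVEL,
    "central-tokenization-service": Tier.MEDIUM,
    "service-mutual-tls": Tier.MEDIUM,
    "chaos-experiments-in-production": Tier.STATE_OF_THE_ART,
}

if __name__ == "__main__":
    done = {"iam-no-wildcard-actions", "iac-for-all-resources", "service-mutual-tls"}
    print(maturity_level(catalogue, done))  # → 1 (tokenization missing for level 2)
```

Requiring all lower tiers first avoids rewarding a project that adopted an advanced practice while skipping the basics.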

Well-structured but generic practices, like those defined in the SAMM framework, need to be mapped to concrete practices in the context of the specific software infrastructure. This infrastructure can be based on infrastructure as a service (IaaS), platform as a service (PaaS), serverless a.k.a. function as a service (FaaS), hybrid on-premises and multi-cloud-vendor setups, or combinations of those. Classifying practices based on design security principles, brainstorming, experience, documented best practices and threat modeling are among the ways to identify (and implement) specific security practices that can be associated with the technology-agnostic practices structured by the assessment framework. This way we shift from project "checklists" to the structured approach needed for an effective long-term security strategy.
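One way to record that association is a small catalogue in which each cloud-specific practice points to the technology-agnostic SAMM practice it implements and lists the platform models it applies to. The sketch below assumes this shape; the practice names are hypothetical examples, while the SAMM practice names are real. A hybrid or multi-model project simply queries with several models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudPractice:
    name: str
    samm_practice: str          # technology-agnostic SAMM practice it is associated with
    platforms: frozenset[str]   # platform models where it applies: "IaaS", "PaaS", "FaaS"


# Hypothetical entries, for illustration only.
CATALOGUE = [
    CloudPractice("Patch and harden VM images", "Environment Management", frozenset({"IaaS"})),
    CloudPractice("Pin managed runtime versions", "Environment Management", frozenset({"PaaS", "FaaS"})),
    CloudPractice("One execution role per function", "Secure Deployment", frozenset({"FaaS"})),
    CloudPractice("Threat model trust boundaries between services", "Threat Assessment",
                  frozenset({"IaaS", "PaaS", "FaaS"})),
]


def practices_for(platforms: set[str]) -> dict[str, list[str]]:
    """Group the applicable cloud-specific practices by SAMM practice."""
    result: dict[str, list[str]] = {}
    for practice in CATALOGUE:
        if practice.platforms & platforms:  # applies to at least one model in use
            result.setdefault(practice.samm_practice, []).append(practice.name)
    return result


if __name__ == "__main__":
    for samm, names in practices_for({"IaaS", "FaaS"}).items():
        print(samm, "->", names)
```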

Software Assurance Maturity Model (SAMM)

This time-proven framework, now at version 2.0, is structured at the highest level in 5 business functions (Governance, Design, Implementation, Verification and Operations), each split into 3 of the 15 technology-agnostic security practices.
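For reference, the SAMM 2.0 structure can be written down as data, which is handy when building an assessment scorecard. The per-function average below is our own simple summary (practices without a score count as 0), a sketch rather than SAMM's official scoring method; practice scores are assumed to be on SAMM's 0 to 3 scale.

```python
# OWASP SAMM 2.0: 5 business functions, 3 security practices each.
SAMM_V2 = {
    "Governance": ["Strategy and Metrics", "Policy and Compliance", "Education and Guidance"],
    "Design": ["Threat Assessment", "Security Requirements", "Security Architecture"],
    "Implementation": ["Secure Build", "Secure Deployment", "Defect Management"],
    "Verification": ["Architecture Assessment", "Requirements-driven Testing", "Security Testing"],
    "Operations": ["Incident Management", "Environment Management", "Operational Management"],
}


def function_scores(scores: dict[str, float]) -> dict[str, float]:
    """Average the 0-3 practice scores per business function; unscored practices count as 0."""
    return {
        function: sum(scores.get(p, 0.0) for p in practices) / len(practices)
        for function, practices in SAMM_V2.items()
    }


if __name__ == "__main__":
    print(function_scores({"Threat Assessment": 1.0, "Security Requirements": 2.0}))
```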

Design Security Principles

Security principles are important because they help identify practices and adapt them to different technology-specific situations, potentially for any software architecture. For a system implemented inside a cloud provider's infrastructure, those principles may be enforced through specific actions and tools. The wording "inside a cloud provider's infrastructure" expresses a key concept of the shared responsibility model: the security of the infrastructure itself is managed by the cloud provider and is considered out of scope for the security assessment, whereas the security of the system being built is the real scope of the analysis and, in general, of the whole programme. This may not always be 100% true, given that even the cloud provider carries some risks and compliance implications. The assumption here is that the system is cloud-based and compatible with high-level compliance requirements; in other words, this is considered a prerequisite. Also, the risk of an IT system is never zero, and hardly any organization other than a cloud provider could guarantee a more secure infrastructure, with possible exceptions for military-grade and defence systems.

Following is a list of principles, with nested cloud-specific annotations that help accomplish each principle:

- Least privilege

  - Minimum access (IAM policies, AWS security groups, mutual authentication between microservices)

  - Tokenization: the practice of using an otherwise meaningless token in place of sensitive data; e.g. in some systems a token replaces the representation of a customer's credit card number. It helps de-scope the systems that only handle tokens. There is usually one centralized tokenization service with higher compliance and security requirements.

  - Level of data indirection: similar to tokenization but not centralized, it consists of replacing internal IDs with tokens held in a stateful session or decryptable only by the called service. Implementing a level of data indirection could also be classified as a defence-in-depth practice.

- Defence in depth

  - Redundant checks at every layer, including reusable components in microservices. An internal microservice will likely be exposed later on, for example once a wonderful Single Page Application incorporates more logic; at that point the trust boundaries of the communication have changed, and this may not be reflected in which data the service trusts (i.e. the service does not perform the required syntactic and semantic validation).

- Accountability

  - Logs/alerts for application events AND infrastructure changes.

- Fail-safe defaults and resilience

  - Anti-fragility, capacity planning

  - Chaos engineering

  - Fault injection

- Automation

  - Infrastructure as code (e.g. CloudFormation); avoid manual operations

  - Detective controls (identify threats and incidents, with notifications and a proper workflow)

- Avoid security by obscurity

  - Use well-known tools and reputable components (e.g. for key management); avoid home-made solutions.
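Least privilege can be partly checked by automation. Below is a minimal sketch of a linter that flags `Allow` statements granting wildcard actions (`*` or `service:*`) or `Resource: "*"` in an AWS IAM policy document. The function name and the example policy are hypothetical, and the sketch ignores `NotAction`, `NotResource` and conditions, so it is a first filter rather than a replacement for the provider's own tooling (e.g. IAM Access Analyzer policy validation).

```python
import json


def _as_list(value):
    """IAM allows a single string or a list for Statement, Action and Resource."""
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy: dict) -> list[str]:
    """Return human-readable findings for overly broad Allow statements."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements only restrict access
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: broad action {action!r}")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"statement {i}: resource '*'")
    return findings


# Hypothetical policy: the first statement is scoped, the second is not.
policy = json.loads("""{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports-bucket/*"},
    {"Effect": "Allow", "Action": ["dynamodb:*"], "Resource": "*"}
  ]
}""")

if __name__ == "__main__":
    for finding in wildcard_findings(policy):
        print(finding)
```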
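To make tokenization concrete, here is an in-memory stand-in for the centralized tokenization service: callers receive a random, meaningless token, and only the vault can map it back to the card number. The `TokenVault` class is hypothetical; a real service would persist the mapping in a hardened, access-controlled store and authenticate every `detokenize` call.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory tokenization service (illustration only)."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # Same card, same token: downstream systems can still join on it.
        if pan in self._by_value:
            return self._by_value[pan]
        token = "tok_" + secrets.token_urlsafe(16)  # random, carries no card data
        self._by_token[token] = pan
        self._by_value[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault holds the mapping; callers must be authorized in a real service.
        return self._by_token[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111111111111111")  # well-known test card number
    print(token)
```

Returning the same token for the same card is a design choice: it keeps tokens usable as join keys, at the cost of revealing when two records refer to the same card.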
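A level of data indirection can be sketched with the "stateful session" variant described above: the client only ever sees opaque handles, and each session keeps its own map back to internal IDs. The class and method names are hypothetical.

```python
import secrets


class SessionIndirection:
    """Per-session map from opaque handles to internal IDs (hypothetical helper)."""

    def __init__(self) -> None:
        self._handles: dict[str, int] = {}

    def expose(self, internal_id: int) -> str:
        # The client receives a random handle, never the database ID.
        handle = secrets.token_urlsafe(12)
        self._handles[handle] = internal_id
        return handle

    def resolve(self, handle: str) -> int:
        # A handle issued to another session is unknown here, so it cannot be replayed.
        try:
            return self._handles[handle]
        except KeyError:
            raise PermissionError("unknown handle for this session") from None


if __name__ == "__main__":
    alice = SessionIndirection()
    handle = alice.expose(42)
    print(handle, "->", alice.resolve(handle))
```

Because handles are random and session-scoped, enumerating or guessing internal IDs (a classic insecure direct object reference) no longer works.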
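The "redundant checks at every layer" point can be illustrated with an internal service that validates its input as if it came from the internet. The SKU format, field names and stock lookup below are hypothetical; the point is the split between syntactic validation (types and format) and semantic validation (values that are well-formed but make no business sense).

```python
import re
from dataclasses import dataclass

SKU_PATTERN = re.compile(r"[A-Z]{3}-\d{4}")  # hypothetical SKU format, e.g. ABC-1234


@dataclass(frozen=True)
class OrderLine:
    sku: str
    quantity: int


def validate_order_line(payload: dict, stock: dict[str, int]) -> OrderLine:
    """Validate even for 'internal' callers: trust boundaries can move later."""
    sku = payload.get("sku")
    qty = payload.get("quantity")
    # Syntactic validation: types and format.
    if not isinstance(sku, str) or not SKU_PATTERN.fullmatch(sku):
        raise ValueError("malformed sku")
    if not isinstance(qty, int) or isinstance(qty, bool):
        raise ValueError("quantity must be an integer")
    # Semantic validation: business rules.
    if qty <= 0:
        raise ValueError("quantity must be positive")
    if qty > stock.get(sku, 0):
        raise ValueError("quantity exceeds available stock")
    return OrderLine(sku, qty)
```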
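For accountability, application events and infrastructure changes can share one structured audit format, so that alerts and investigations query a single stream. The sketch below writes JSON lines through Python's standard `logging` module; the field names and the `audit` logger name are assumptions. In AWS, infrastructure API calls are also recorded by CloudTrail, and the application records would typically be shipped to a central, tamper-resistant log store.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("audit")
KINDS = {"application", "infrastructure"}


def audit_event(kind: str, actor: str, action: str, target: str, **details) -> str:
    """Emit one structured audit record (JSON line) and return it."""
    if kind not in KINDS:
        raise ValueError(f"kind must be one of {sorted(KINDS)}")
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # always UTC
        "kind": kind,
        "actor": actor,
        "action": action,
        "target": target,
        "details": details,  # must be JSON-serializable
    }
    line = json.dumps(record, sort_keys=True)
    audit_logger.info(line)
    return line


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    audit_event("application", "user-123", "refund.approve", "order-789", amount=25)
    audit_event("infrastructure", "deploy-pipeline", "UpdateStack", "payments-stack")
```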
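Fault injection can start small, in a test suite, before moving to chaos experiments on real environments with dedicated tooling. The sketch below wraps a dependency so that it fails with a given probability and checks that a retry policy keeps most calls successful. The decorator, the retry helper and `fetch_price` are hypothetical names; a seeded random generator keeps the experiment reproducible.

```python
import functools
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def inject_faults(rate: float, rng: Optional[random.Random] = None):
    """Decorator that makes the wrapped call raise ConnectionError with probability `rate`."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    source = rng or random.Random()

    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            if source.random() < rate:
                raise ConnectionError(f"injected fault in {fn.__name__}")
            return fn(*args, **kwargs)
        return wrapper

    return decorator


def call_with_retry(fn: Callable[[], T], attempts: int = 3) -> T:
    """The resilience mechanism under test: retry a failing dependency a few times."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts:
                raise  # give up and surface the failure to the caller
    raise AssertionError("unreachable")


# Hypothetical downstream dependency, failing half of the time during the experiment.
@inject_faults(rate=0.5, rng=random.Random(7))
def fetch_price() -> float:
    return 9.99
```

With a 50% failure rate and 3 attempts, roughly 87.5% of calls should succeed (1 - 0.5³); a much lower success rate during the experiment would reveal that the retry policy is not doing its job.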
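With infrastructure as code, resources are declared and versioned rather than created by hand. As a small sketch, the Python function below emits a CloudFormation template (JSON) for an S3 bucket with default encryption, versioning and all public access blocked; the logical ID `ReportsBucket` is a placeholder. The output could then be deployed with the AWS CLI, e.g. `aws cloudformation deploy --template-file bucket.json --stack-name reports-bucket` (file and stack names hypothetical).

```python
import json


def secure_bucket_template(logical_id: str = "ReportsBucket") -> dict:
    """CloudFormation template for an encrypted, versioned, non-public S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Encrypted, versioned, non-public S3 bucket (illustrative sketch)",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Default server-side encryption with S3-managed keys.
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                        ]
                    },
                    # Fail-safe default: no public ACLs or policies.
                    "PublicAccessBlockConfiguration": {
                        "BlockPublicAcls": True,
                        "BlockPublicPolicy": True,
                        "IgnorePublicAcls": True,
                        "RestrictPublicBuckets": True,
                    },
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(secure_bucket_template(), indent=2))
```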
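Detective controls boil down to evaluating rules against a stream of events and notifying someone through a proper workflow. The sketch below assumes a simplified, flattened event shape (`eventID`, `eventName`, `cidrs`, `userType`); real CloudTrail records nest these fields (for example, the caller type lives under `userIdentity.type`), so the rules and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Finding:
    rule: str
    event_id: str


def sg_open_to_internet(event: dict) -> bool:
    """Hypothetical rule: a security group ingress rule opened to the whole internet."""
    return (event.get("eventName") == "AuthorizeSecurityGroupIngress"
            and "0.0.0.0/0" in event.get("cidrs", []))


def root_account_used(event: dict) -> bool:
    """Hypothetical rule: any activity by the root account."""
    return event.get("userType") == "Root"


RULES: dict[str, Callable[[dict], bool]] = {
    "sg-open-to-internet": sg_open_to_internet,
    "root-account-used": root_account_used,
}


def detect(events: Iterable[dict], notify: Callable[[Finding], None]) -> list[Finding]:
    """Evaluate every rule on every event and notify (e.g. open a ticket) on each match."""
    findings = []
    for event in events:
        for name, rule in RULES.items():
            if rule(event):
                finding = Finding(name, event["eventID"])
                findings.append(finding)
                notify(finding)
    return findings
```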

Moving forward

In the next parts of the article we'll:

- Identify a set of architecture-specific security practices and associate them with the maturity model.

- Create a security programme to improve the security posture of an organization, business unit or project.

- Explore incentives that make the strategy effective, from top management down to the specific projects.
